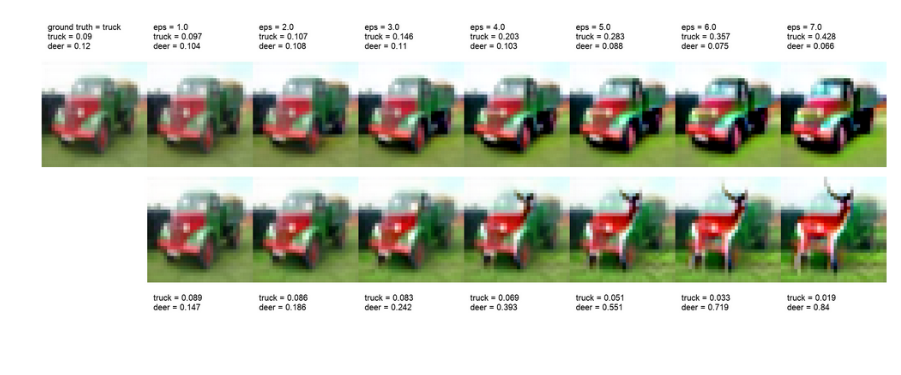

Visualizing Adversarial Attacks on DNNs

As part of a computer science group project, I helped visualize adversarial attacks on Deep Neural Networks (DNNs). The goal was to understand how adversarial attacks work and how they can be visualized.

Link to GitHub: [Placeholder, will be updated soon.]

This post is licensed under CC BY 4.0 by the author.